Hi,

I use the iota² Python toolchain to launch OTB computations on an Ubuntu 20.04 server (56 CPUs @ 2.30 GHz, 6 cores each, 300 GB RAM).

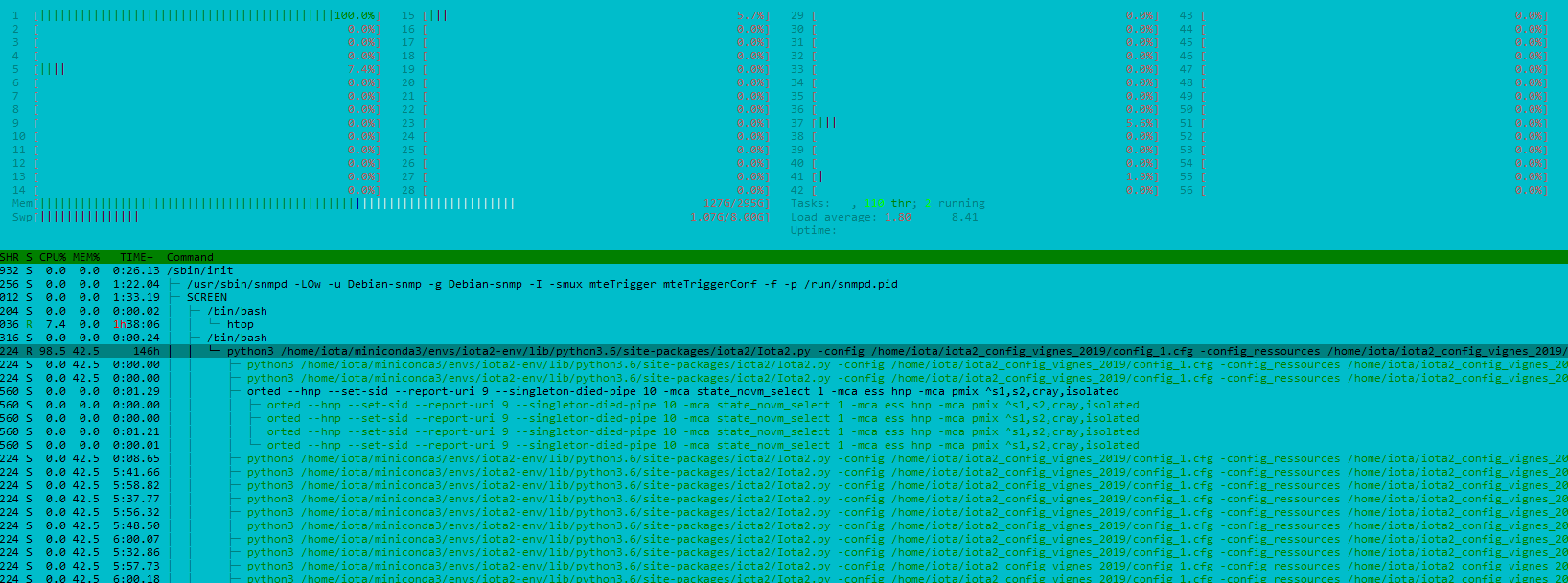

I’m surprised to see that the ImageClassifier step alternates between single-threaded and multithreaded phases (or between single-process and multi-process phases?).

Even more surprising, the computation takes nearly twice as long on this server as on a simple PC (Fedora, Intel Core i5 @ 3.20 GHz, 16 GB RAM).

Am I missing an optimization parameter?

I have set the following:

```
export GDAL_CACHEMAX=64000
export OTB_MAX_RAM_HINT=200000
```
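Environment variables are inherited by child processes, so both settings have to be present in the environment of whatever process ends up running OTB. A minimal Python sketch under that assumption: `build_otb_env` is a hypothetical helper, and the child command is a stand-in that only echoes the values it inherited (replace it with whatever actually launches the chain).

```python
import os
import subprocess
import sys


def build_otb_env(gdal_cache_mb: int, ram_hint_mb: int) -> dict:
    """Return a copy of the current environment with the two settings
    from the post (values in MB); os.environ itself is left untouched."""
    env = dict(os.environ)
    env["GDAL_CACHEMAX"] = str(gdal_cache_mb)
    env["OTB_MAX_RAM_HINT"] = str(ram_hint_mb)
    return env


if __name__ == "__main__":
    env = build_otb_env(64000, 200000)
    # Stand-in child process (hypothetical): it only prints the inherited values.
    result = subprocess.run(
        [sys.executable, "-c",
         "import os; print(os.environ['GDAL_CACHEMAX'], os.environ['OTB_MAX_RAM_HINT'])"],
        env=env, capture_output=True, text=True, check=True,
    )
    print(result.stdout.strip())  # 64000 200000
```

Exporting the variables in the shell before starting the chain achieves the same thing; the sketch only makes the inheritance explicit.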

Example:

```
2020-12-08 07:34:23 (INFO) ImageClassifier: Default RAM limit for OTB is 200000 MB
2020-12-08 07:34:23 (INFO) ImageClassifier: GDAL maximum cache size is 64000 MB
2020-12-08 07:34:23 (INFO) ImageClassifier: OTB will use at most 56 threads
2020-12-08 07:34:23 (INFO) ImageClassifier: Loading model
2020-12-08 07:34:23 (INFO) ImageClassifier: Model loaded
2020-12-08 07:34:23 (INFO) ImageClassifier: Input image normalization deactivated.
2020-12-08 07:34:25 (INFO): Estimated memory for full processing: 1.34745e+06MB (avail.: 81920 MB), optimal image partitioning: 17 blocks
2020-12-08 07:34:25 (INFO): File /home/iota/iota2_output_vignes_2019/config_1/classif/Classif_T31TGJ_model_1_seed_4.tif will be written in 18 blocks of 10999x612 pixels
```
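The "optimal image partitioning" figure in the log is consistent with dividing the estimated memory by the available memory and rounding up. A minimal sketch reproducing it, assuming that is how the block count is derived; `blocks_needed` is a hypothetical helper, and the numbers are copied from the log. Note that the available memory reported is 81920 MB, not the 200000 MB set through `OTB_MAX_RAM_HINT`, possibly because a per-application `ram` value is passed by the caller.

```python
import math


def blocks_needed(estimated_mb: float, available_mb: float) -> int:
    """Smallest number of blocks whose share of the estimated memory
    fits in the available memory (assumed partitioning rule)."""
    return math.ceil(estimated_mb / available_mb)


# Figures copied from the log above.
estimated = 1.34745e6  # "Estimated memory for full processing" (MB)
available = 81920      # "avail." (MB)

print(estimated / available)                # about 16.45
print(blocks_needed(estimated, available))  # 17, matching the log
# Under the same assumed rule, the full 200000 MB hint would give 7 blocks.
print(blocks_needed(estimated, 200000))
```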

Most of the time is spent on a single processor.

Thanks a lot for your help!