Greetings @remi.cresson

We’ve explored the suggested solutions, but the issue persists. Could we please revisit the solutions proposed earlier for further clarity?

For Solution - 1:

Currently, we normalize the input and pass it to PolygonClassStatistics. Its output goes into SampleSelection, and the output of SampleSelection is used as the input of PatchesExtraction, from which we extract the patches and labels.

    input = normalized_input  # use the normalized input !!

    out_patches_A = input.split('.')[0] + "_patches_A.tif"
    out_labels_A = input.split('.')[0] + "_labels_A.tif"
    out_patches_B = input.split('.')[0] + "_patches_B.tif"
    out_labels_B = input.split('.')[0] + "_labels_B.tif"

    #--------------------------------------------------------------------------------
    # Step 1: per-class polygon statistics
    apptype = "PolygonClassStatistics"
    vec = "area2_0123_2023_raster_classification_13.shp"
    output = "area2_0123_2023_raster_classification_13_vecstats.xml"
    PolygonClassStatistics(apptype, datapath, input, vec, output)
    print("\nStats created")

    #------------------------------------------------------------------------------
    # Step 2: sample selection, run twice to get two point sets (A and B)
    apptype = "SampleSelection"
    instats = "area2_0123_2023_raster_classification_13_vecstats.xml"
    output_A = "area2_0123_2023_raster_classification_13_points_A.shp"
    output_B = "area2_0123_2023_raster_classification_13_points_B.shp"
    SampleSelection(apptype, datapath, input, vec, instats, output_A)
    print("\nSamples A created")
    SampleSelection(apptype, datapath, input, vec, instats, output_B)
    print("\nSamples B created")

    #------------------------------------------------------------------------------
    out_patches_A = input.split('.')[0] + "_patches_A.tif"
    out_labels_A = input.split('.')[0] + "_labels_A.tif"
    out_patches_B = input.split('.')[0] + "_patches_B.tif"
    out_labels_B = input.split('.')[0] + "_labels_B.tif"

    #------------------------------------------------------------------------------
    # Step 3: patches extraction around the selected points
    apptype = "PatchesExtraction"
    patchsize = 128  #16?
    vec_A = output_A
    vec_B = output_B
    PatchesExtraction(apptype, datapath, input, vec_A, out_patches_A, out_labels_A, patchsize)
    print("\nPatches A created")
    PatchesExtraction(apptype, datapath, input, vec_B, out_patches_B, out_labels_B, patchsize)
    print("\nPatches B created")

These are the steps we have tried so far in PatchesExtraction:

- Removed the outlabels field altogether, as suggested.

- Added OTB_TF_NSOURCES = 2 and set all the parameters for “source2.il”, but this raises a RuntimeError:

RuntimeError: Exception thrown in otbApplication Application_SetParameterStringList: /src/otb/otb/Modules/Wrappers/ApplicationEngine/src/otbWrapperParameterGroup.cxx:470:

itk::ERROR: ParameterList(0x5619dee818c0): Could not find parameter source2.il

- Left patchsize unset, which also raises a RuntimeError (cannot convert patchsizex to int: no value), although the application description says:

"For a SPOT6 image for instance, the patch size can be 64x64 and for an input Sentinel-2 time series the patch size could be 1x1. Note that if a dimension size is not defined, the largest one will be used (i.e. input image dimensions"

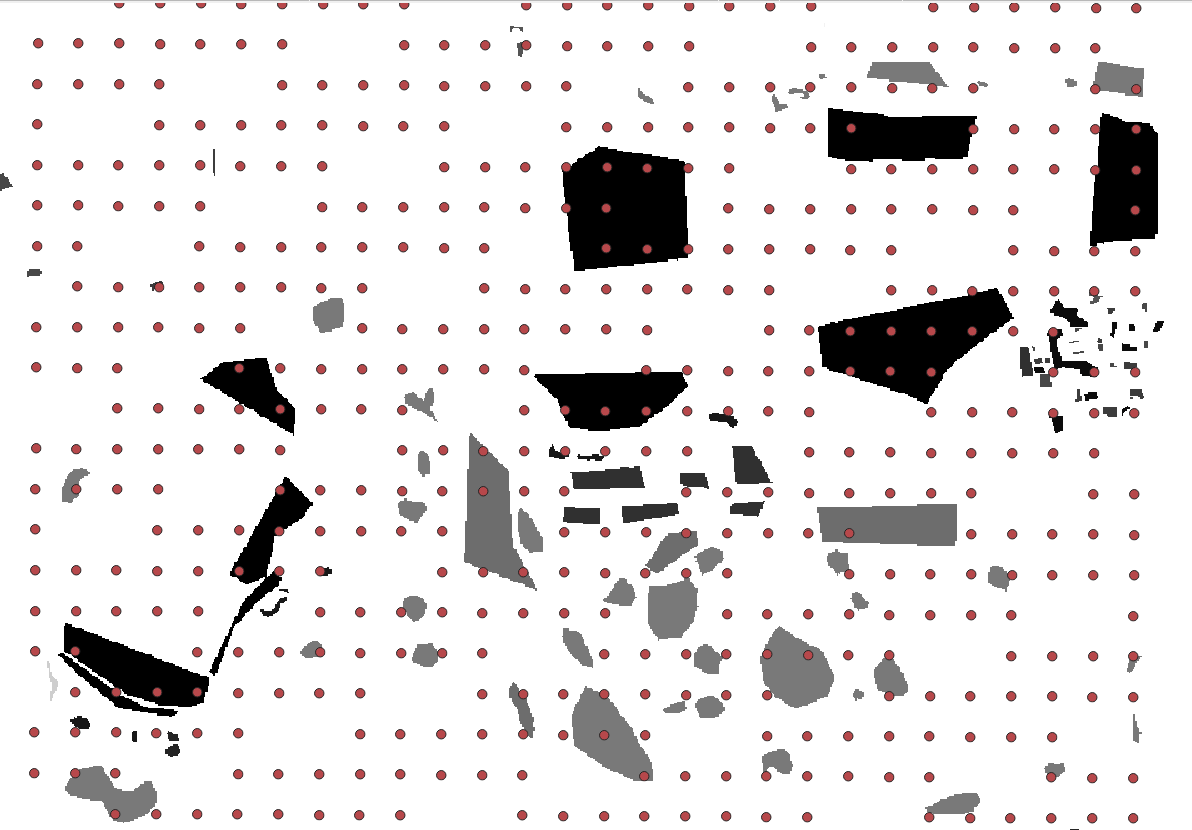

We also tried visualizing the outputs. The patches image appears as a large rectangle, somewhat like a building, whereas the labels image appears as a very thin rectangle on top of the patches rectangle. The two do not overlap as they are supposed to.

This is how we are using the PatchesExtraction.

    def PatchesExtraction(apptype, datapath, input, vec, out_patches, out_labels, patchsize, OTB_TF_NSOURCES = 2):
        # trying OTB_TF_Nsources = 2
        app = otbApplication.Registry.CreateApplication(apptype)
        app.SetParameterStringList("source1.il", [datapath + input])
        app.SetParameterInt("source1.patchsizex", patchsize)
        app.SetParameterInt("source1.patchsizey", patchsize)
        app.SetParameterString("vec", datapath + vec)
        app.SetParameterString("field", "class")
        app.SetParameterString("source1.out", datapath + out_patches)
        # app.SetParameterString("outlabels", datapath + out_labels)

        # app.SetParameterStringList("source2.il", [datapath + input])
        # app.SetParameterInt("source2.patchsizex", patchsize)
        # app.SetParameterInt("source2.patchsizey", patchsize)
        # app.SetParameterString("vec", datapath + vec)
        # app.SetParameterString("field", "class")
        # app.SetParameterString("source2.out", datapath + out_labels)

        app.ExecuteAndWriteOutput()
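For context on the `source2.il` error: as far as we understand, OTBTF reads the number of sources from the `OTB_TF_NSOURCES` environment variable when the application is created, so passing it as a Python function argument would have no effect. Below is a minimal sketch of that idea, not a confirmed fix. It assumes the labels come from a separate label raster aligned with the input image; `labels_image`, `build_params`, `configure` and `run_patches_extraction` are hypothetical names, and the parameter keys are the ones already used above.

```python
import os


def build_params(datapath, image, labels_image, vec, out_patches, out_labels, patchsize):
    """Collect PatchesExtraction parameters for two sources (image + labels)."""
    return {
        "source1.il": [datapath + image],
        "source1.patchsizex": patchsize,
        "source1.patchsizey": patchsize,
        "source1.out": datapath + out_patches,
        # Assumption: source2 is a label raster, extracted with the same patch size
        "source2.il": [datapath + labels_image],
        "source2.patchsizex": patchsize,
        "source2.patchsizey": patchsize,
        "source2.out": datapath + out_labels,
        "vec": datapath + vec,
        "field": "class",
    }


def configure(app, params):
    """Apply each parameter with the setter that matches its value type."""
    for key, value in params.items():
        if isinstance(value, list):
            app.SetParameterStringList(key, value)
        elif isinstance(value, int):
            app.SetParameterInt(key, value)
        else:
            app.SetParameterString(key, value)


def run_patches_extraction(params, n_sources=2):
    # Assumption: the variable has to be set BEFORE the application is created
    os.environ["OTB_TF_NSOURCES"] = str(n_sources)
    import otbApplication  # imported here so the helpers above work without OTB
    app = otbApplication.Registry.CreateApplication("PatchesExtraction")
    configure(app, params)
    app.ExecuteAndWriteOutput()
```

Keeping the parameters in a dictionary makes it easy to print them and check that every `source2.*` key is present before the application runs.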

I think we are not implementing Solution 1 correctly. Could you please elaborate on how to implement it?

For Solution 2: with the same PatchesExtraction arguments as above (patch size = 128), we are somehow getting patches of shape (8, 8, 8), and the labels are still (1, 1, 1).

Even before the patches were (8x8x8), we were getting the same error.

    def get_outputs(self, normalized_inputs: dict) -> dict:
        norm_inp = normalized_inputs[INPUT_NAME]

        def _conv(inp, depth, name):
            conv_op = tf.keras.layers.Conv2D(
                filters=depth,
                kernel_size=3,
                strides=2,
                activation="relu",
                padding="same",
                name=name
            )
            return conv_op(inp)

        def _tconv(inp, depth, name, activation="relu"):
            tconv_op = tf.keras.layers.Conv2DTranspose(
                filters=depth,
                kernel_size=3,
                strides=2,
                activation=activation,
                padding="same",
                name=name
            )
            return tconv_op(inp)

        out_conv5 = _conv(norm_inp, 8, "conv5")
        out_conv1 = _conv(out_conv5, 16, "conv1")
        out_conv2 = _conv(out_conv1, 32, "conv2")
        out_conv3 = _conv(out_conv2, 64, "conv3")
        out_conv4 = _conv(out_conv3, 64, "conv4")
        out_tconv1 = _tconv(out_conv4, 64, "tconv1") + out_conv3
        out_tconv2 = _tconv(out_tconv1, 32, "tconv2") + out_conv2
        out_tconv3 = _tconv(out_tconv2, 16, "tconv3") + out_conv1
        out_tconv5 = _tconv(out_tconv3, 8, "tconv5") + out_conv5
        out_tconv4 = _tconv(out_tconv5, N_CLASSES, "classifier", None)

        gap = out_tconv4
        # gap = tf.keras.layers.Flatten(gap)
        gap = tf.expand_dims(gap, axis=0)

        print("Batch size:", gap.shape[0])
        print("Height:", gap.shape[1])
        print("Width:", gap.shape[2])
        print("Channels:", gap.shape[3])

        softmax_op = tf.keras.layers.Softmax(name=OUTPUT_SOFTMAX_NAME)
        predictions = softmax_op(gap)
        predictions = tf.argmax(predictions)

        return {TARGET_NAME: predictions}

    def create_tfrecords(patches, labels, outdir):
        patches = sorted(patches)
        labels = sorted(labels)
        outdir = Path(outdir)
        if not outdir.exists():
            outdir.mkdir(exist_ok=True)

        #create a dataset
        dataset = DatasetFromPatchesImages(
            filenames_dict = {
                "input_xs_patches":patches,
                "labels_patches": labels
            }
        )

        is_eager_execution = tf.executing_eagerly()
        print('Is eager execution:', is_eager_execution)

        dataset.to_tfrecords(output_dir=outdir, drop_remainder=False)

    #----Main------------------------------------------------------------
    if __name__=="__main__":
        datapath = "/home/otbuser/all/data/"
        batch_size = 5
        learning_rate = 0.0001
        nb_epochs = 5

        # create TFRecords
        tf.compat.v1.enable_eager_execution()
        patches = ['/home/otbuser/all/data/area2_0530_2022_8bands_patches_A.tif', '/home/otbuser/all/data/area2_0530_2022_8bands_patches_B.tif']
        labels = ['/home/otbuser/all/data/area2_0530_2022_8bands_labels_A.tif', '/home/otbuser/all/data/area2_0530_2022_8bands_labels_B.tif']
        create_tfrecords(patches=patches[0:1], labels=labels[0:1], outdir=datapath+"train")
        create_tfrecords(patches=patches[1:], labels=labels[1:], outdir=datapath+"valid")

        # Train the model and save the model
        train_dir = os.path.join(datapath, "train")
        valid_dir = os.path.join(datapath, "valid")
        test_dir = None # define the training directory if test dataset is available

        kwargs = {
            "batch_size": batch_size,
            "target_keys": [TARGET_NAME],
            "preprocessing_fn": dataset_preprocessing_fn
        }
        #shuffle_buffer_size=1000,

        ds_train = TFRecords(train_dir).read(**kwargs)
        ds_valid = TFRecords(valid_dir).read(**kwargs)

        train(datapath+"sandbox_model", batch_size, learning_rate, nb_epochs, ds_train, ds_valid, ds_test=None)

        tf.compat.v1.disable_eager_execution()

For Solution 2, these are the changes we made:

- Removed the Global Average Pooling layer, as suggested.

- Added tf.expand_dims before the softmax layer, because we were getting an error without it.

The error was raised in postprocess_outputs() in model.py, at this line:

cropped = out_tensor[:, crop:-crop, crop:-crop, :]

I think this is caused by a compatibility issue between TF1 and TF2.
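A possible alternative reading, based on the `(None, None, None, 20)` shape that the log reports after `tf.math.argmax`: `tf.argmax` reduces over axis 0 when no axis is given, so after `tf.expand_dims(gap, axis=0)` it would remove the added leading dimension rather than the class dimension. The helper below is a plain-Python sketch of that shape arithmetic only; `argmax_shape` is a hypothetical name, not a TensorFlow function.

```python
def argmax_shape(shape, axis=0):
    """Return the shape left after an argmax over `axis` (that axis is dropped)."""
    axis = axis % len(shape)  # support negative axes such as -1
    return shape[:axis] + shape[axis + 1:]


# Shape after tf.expand_dims(gap, axis=0): (1, batch, height, width, classes)
expanded = (1, None, None, None, 20)
print(argmax_shape(expanded))          # (None, None, None, 20): class axis is kept

# Shape without expand_dims, reducing over the class axis instead
logits = (None, None, None, 20)
print(argmax_shape(logits, axis=-1))   # (None, None, None): one class index per pixel
```

If this is what happens, the 4D output would only look compatible with the cropping line, while its last axis still holds the 20 classes instead of a single label per pixel.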

This is the output and error.

2023-10-20 07:07:14 INFO Number of samples: 3980

2023-10-20 07:07:14 INFO output_types: {'input_xs_patches': tf.float32, 'labels_patches': tf.uint8}

2023-10-20 07:07:14 INFO output_shapes: {'input_xs_patches': (8, 8, 8), 'labels_patches': (1, 1, 1)}

Is eager execution: True

2023-10-20 07:07:15 INFO 3980 samples

100%|█████████████████████████████████████████████████████████████████████████████████████████| 40/40 [00:00<00:00, 46.86it/s]

2023-10-20 07:07:16 INFO Number of samples: 3980

2023-10-20 07:07:16 INFO output_types: {'input_xs_patches': tf.float32, 'labels_patches': tf.uint8}

2023-10-20 07:07:16 INFO output_shapes: {'input_xs_patches': (8, 8, 8), 'labels_patches': (1, 1, 1)}

Is eager execution: True

2023-10-20 07:07:16 INFO 3980 samples

100%|█████████████████████████████████████████████████████████████████████████████████████████| 40/40 [00:00<00:00, 45.51it/s]

2023-10-20 07:07:16 INFO Searching TFRecords in /home/otbuser/all/data/train/*.records...

2023-10-20 07:07:16 INFO Number of matching TFRecords: 40

2023-10-20 07:07:16 INFO Reducing number of records to : 40

2023-10-20 07:07:17 INFO Searching TFRecords in /home/otbuser/all/data/valid/*.records...

2023-10-20 07:07:17 INFO Number of matching TFRecords: 40

2023-10-20 07:07:17 INFO Reducing number of records to : 40

WARNING:tensorflow:There are non-GPU devices in `tf.distribute.Strategy`, not using nccl allreduce.

2023-10-20 07:07:17 WARNING There are non-GPU devices in `tf.distribute.Strategy`, not using nccl allreduce.

INFO:tensorflow:Using MirroredStrategy with devices ('/job:localhost/replica:0/task:0/device:CPU:0',)

2023-10-20 07:07:17 INFO Using MirroredStrategy with devices ('/job:localhost/replica:0/task:0/device:CPU:0',)

2023-10-20 07:07:17 INFO Dataset input element spec: {'input_xs': TensorSpec(shape=(5, 8, 8, 8), dtype=tf.float32, name=None)}

2023-10-20 07:07:17 INFO Found dataset input keys: ['input_xs']

2023-10-20 07:07:17 INFO Inputs shapes: {'input_xs': TensorShape([Dimension(8), Dimension(8), Dimension(8)])}

2023-10-20 07:07:17 INFO Inference cropping values: [16, 32, 64, 96, 128]

2023-10-20 07:07:17 INFO Original shape for input input_xs: [Dimension(8), Dimension(8), Dimension(8)]

2023-10-20 07:07:17 INFO New shape for input input_xs: [None, None, Dimension(8)]

2023-10-20 07:07:17 INFO Model inputs: {'input_xs': <KerasTensor: shape=(?, ?, ?, 8) dtype=float32 (created by layer 'input_xs')>}

2023-10-20 07:07:17 INFO Normalized model inputs: {'input_xs': <KerasTensor: shape=(?, ?, ?, 8) dtype=float32 (created by layer 'tf.math.multiply')>}

Batch size: 1

Height: ?

Width: ?

Channels: ?

2023-10-20 07:07:17 INFO Model outputs: {'predictions': <KerasTensor: shape=(?, ?, ?, 20) dtype=int64 (created by layer 'tf.math.argmax')>}

2023-10-20 07:07:17 INFO Adding extra output for tensor predictions with crop 16 (tf.math.argmax_crop16)

2023-10-20 07:07:17 INFO Adding extra output for tensor predictions with crop 32 (tf.math.argmax_crop32)

2023-10-20 07:07:17 INFO Adding extra output for tensor predictions with crop 64 (tf.math.argmax_crop64)

2023-10-20 07:07:17 INFO Adding extra output for tensor predictions with crop 96 (tf.math.argmax_crop96)

2023-10-20 07:07:17 INFO Adding extra output for tensor predictions with crop 128 (tf.math.argmax_crop128)

WARNING:tensorflow:From /home/otbuser/all/code/cocktail/sandbox/otbtf/combined_model.py:294: The name tf.keras.optimizers.Adam is deprecated. Please use tf.keras.optimizers.legacy.Adam instead.

2023-10-20 07:07:17 WARNING From /home/otbuser/all/code/cocktail/sandbox/otbtf/combined_model.py:294: The name tf.keras.optimizers.Adam is deprecated. Please use tf.keras.optimizers.legacy.Adam instead.

    Model: "FCNNModel"
    ______________________________________________________________________________________________________________________
    Layer (type)                                  Output Shape               Param #  Connected to
    ======================================================================================================================
    input_xs (InputLayer)                         [(None, None, None, 8)]    0        []
    tf.cast (TFOpLambda)                          (None, None, None, 8)      0        ['input_xs[0][0]']
    tf.math.multiply (TFOpLambda)                 (None, None, None, 8)      0        ['tf.cast[0][0]']
    conv5 (Conv2D)                                (None, None, None, 8)      584      ['tf.math.multiply[0][0]']
    conv1 (Conv2D)                                (None, None, None, 16)     1168     ['conv5[0][0]']
    conv2 (Conv2D)                                (None, None, None, 32)     4640     ['conv1[0][0]']
    conv3 (Conv2D)                                (None, None, None, 64)     18496    ['conv2[0][0]']
    conv4 (Conv2D)                                (None, None, None, 64)     36928    ['conv3[0][0]']
    tconv1 (Conv2DTranspose)                      (None, None, None, 64)     36928    ['conv4[0][0]']
    tf.__operators__.add (TFOpLambda)             (None, None, None, 64)     0        ['tconv1[0][0]',
                                                                                       'conv3[0][0]']
    tconv2 (Conv2DTranspose)                      (None, None, None, 32)     18464    ['tf.__operators__.add[0][0]']
    tf.__operators__.add_1 (TFOpLambda)           (None, None, None, 32)     0        ['tconv2[0][0]',
                                                                                       'conv2[0][0]']
    tconv3 (Conv2DTranspose)                      (None, None, None, 16)     4624     ['tf.__operators__.add_1[0][0]']
    tf.__operators__.add_2 (TFOpLambda)           (None, None, None, 16)     0        ['tconv3[0][0]',
                                                                                       'conv1[0][0]']
    tconv5 (Conv2DTranspose)                      (None, None, None, 8)      1160     ['tf.__operators__.add_2[0][0]']
    tf.__operators__.add_3 (TFOpLambda)           (None, None, None, 8)      0        ['tconv5[0][0]',
                                                                                       'conv5[0][0]']
    classifier (Conv2DTranspose)                  (None, None, None, 20)     1460     ['tf.__operators__.add_3[0][0]']
    tf.expand_dims (TFOpLambda)                   (1, None, None, None, 20)  0        ['classifier[0][0]']
    predictions_softmax_tensor (Softmax)          (1, None, None, None, 20)  0        ['tf.expand_dims[0][0]']
    tf.math.argmax (TFOpLambda)                   (None, None, None, 20)     0        ['predictions_softmax_tensor[0][0]']
    tf.__operators__.getitem_4 (SlicingOpLambda)  (None, None, None, 20)     0        ['tf.math.argmax[0][0]']
    tf.__operators__.getitem (SlicingOpLambda)    (None, None, None, 20)     0        ['tf.math.argmax[0][0]']
    tf.__operators__.getitem_1 (SlicingOpLambda)  (None, None, None, 20)     0        ['tf.math.argmax[0][0]']
    tf.__operators__.getitem_2 (SlicingOpLambda)  (None, None, None, 20)     0        ['tf.math.argmax[0][0]']
    tf.__operators__.getitem_3 (SlicingOpLambda)  (None, None, None, 20)     0        ['tf.math.argmax[0][0]']
    tf.math.argmax_crop128 (Activation)           (None, None, None, 20)     0        ['tf.__operators__.getitem_4[0][0]']
    tf.math.argmax_crop16 (Activation)            (None, None, None, 20)     0        ['tf.__operators__.getitem[0][0]']
    tf.math.argmax_crop32 (Activation)            (None, None, None, 20)     0        ['tf.__operators__.getitem_1[0][0]']
    tf.math.argmax_crop64 (Activation)            (None, None, None, 20)     0        ['tf.__operators__.getitem_2[0][0]']
    tf.math.argmax_crop96 (Activation)            (None, None, None, 20)     0        ['tf.__operators__.getitem_3[0][0]']
    ======================================================================================================================
    Total params: 124,452
    Trainable params: 124,452
    Non-trainable params: 0
    ______________________________________________________________________________________________________________________

2023-10-20 07:07:17.861851: I tensorflow/core/common_runtime/executor.cc:1197] [/device:CPU:0] (DEBUG INFO) Executor start aborting (this does not indicate an error and you can ignore this message): INVALID_ARGUMENT: You must feed a value for placeholder tensor 'Placeholder/_0' with dtype string and shape [40]

[[{{node Placeholder/_0}}]]

2023-10-20 07:07:17.862284: I tensorflow/core/common_runtime/executor.cc:1197] [/device:CPU:0] (DEBUG INFO) Executor start aborting (this does not indicate an error and you can ignore this message): INVALID_ARGUMENT: You must feed a value for placeholder tensor 'Placeholder/_0' with dtype string and shape [40]

[[{{node Placeholder/_0}}]]

Epoch 1/5

INFO:tensorflow:Error reported to Coordinator: Exception encountered when calling layer 'tf.__operators__.add_2' (type TFOpLambda).

Dimensions must be equal, but are 8 and 2 for '{{node FCNNModel/tf.__operators__.add_2/AddV2}} = AddV2[T=DT_FLOAT](FCNNModel/tconv3/Relu, FCNNModel/conv1/Relu)' with input shapes: [5,8,8,16], [5,2,2,16].

Call arguments received by layer 'tf.__operators__.add_2' (type TFOpLambda):

• x=tf.Tensor(shape=(5, 8, 8, 16), dtype=float32)

• y=tf.Tensor(shape=(5, 2, 2, 16), dtype=float32)

• name=None

Traceback (most recent call last):

File "/opt/otbtf/lib/python3/dist-packages/tensorflow/python/training/coordinator.py", line 293, in stop_on_exception

yield

As you can see, there is a shape mismatch between x and y, and it stays the same regardless of the batch size or the input.

x = (5, 8, 8, 16), y = (5, 2, 2, 16)

We also tried switching the layers to “valid” padding, which gave an error at the second conv layer.

We also tried changing some other parameters, and tried a smaller U-Net as well.

Every attempt led to the same error (the mismatch between x and y).
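For what it is worth, the numbers in the error can be reproduced with simple arithmetic, assuming the usual Keras behaviour for `padding="same"`: a stride-2 `Conv2D` yields ceil(n / 2) and a stride-2 `Conv2DTranspose` yields 2n. The sketch below traces only the spatial size through the layers of `get_outputs`; `trace` is a hypothetical helper, and skip tensors of size 1 are treated as broadcastable, which would explain why the first failure only shows up at `tconv3`.

```python
import math


def trace(size):
    """Trace the spatial size through the network; return (sizes, first_mismatch)."""
    sizes = {}
    n = size
    # Encoder: five stride-2 convolutions with padding="same" -> ceil(n / 2)
    for name in ["conv5", "conv1", "conv2", "conv3", "conv4"]:
        n = math.ceil(n / 2)
        sizes[name] = n
    # Decoder: stride-2 transposed convolutions with padding="same" -> 2 * n,
    # each followed by an addition with the matching encoder output
    for name, skip in [("tconv1", "conv3"), ("tconv2", "conv2"),
                       ("tconv3", "conv1"), ("tconv5", "conv5")]:
        n = 2 * n
        sizes[name] = n
        s = sizes[skip]
        if n != s and 1 not in (n, s):  # a size of 1 would broadcast silently
            return sizes, (name, n, s)
        n = max(n, s)
    sizes["classifier"] = 2 * n
    return sizes, None


print(trace(8)[1])    # ('tconv3', 8, 2): the 8 vs 2 mismatch from the error
print(trace(128)[1])  # None: every skip connection lines up
```

If this reading is right, the network as written needs patches whose side is a multiple of 32 (five stride-2 stages), so 8x8 patches would fail whatever the batch size, and the real question becomes why PatchesExtraction produced 8x8 patches instead of 128x128.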

Any assistance would be greatly appreciated, as we have been stuck on this issue for quite some time.